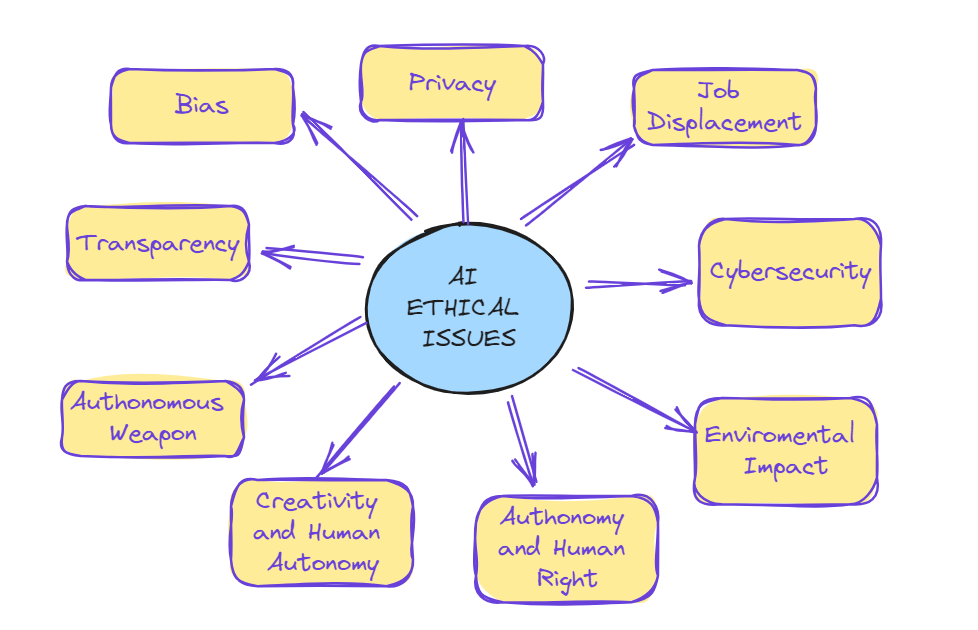

Artificial intelligence has permeated nearly every facet of modern life, revolutionizing industries, improving efficiency, and transforming the way we live and work. However, this rapid advancement brings a host of ethical dilemmas that society must grapple with. From bias and privacy to job displacement and autonomous decision-making, the ethical landscape of AI is fraught with complexities. Below, we examine in greater depth the issues we consider most critical:

- Bias

- Privacy

- Job Displacement

- Accountability and Transparency

- Autonomous Weapons

- Autonomy and Human Rights

- Environmental Impact

- Cybersecurity

- Misinformation and Fake News

- Creativity and Human Autonomy

Bias

One of the most significant ethical challenges in AI is the perpetuation of bias within algorithms. AI systems learn from historical data and often reproduce the biases present in that data. This can lead to discriminatory outcomes that disproportionately affect marginalized communities. For instance, biased facial recognition systems may misidentify individuals of certain ethnicities more frequently than others, leading to unjust consequences. Governments in the United States and Europe are increasingly introducing guidelines and regulations to address bias in AI, advocating for transparency and fairness in algorithmic decision-making.

Privacy

AI systems frequently rely on vast amounts of personal data to function effectively, raising significant privacy concerns. From surveillance technologies to targeted advertising algorithms, the collection and utilization of personal data without adequate consent or safeguards can infringe upon individuals’ privacy rights. Countries such as Germany and Canada have stringent data protection laws in place, emphasizing the importance of informed consent, data minimization, and user control over personal information in AI applications.

Job Displacement

The automation of tasks through AI and robotics has the potential to displace millions of workers worldwide, leading to job insecurity and economic upheaval. As AI continues to augment and, in some cases, replace human labor across various industries, governments face the ethical challenge of ensuring a just transition for affected workers. Countries like Finland and the Netherlands have experimented with innovative policies such as universal basic income and lifelong learning programs to mitigate the adverse effects of job displacement and to support workforce reskilling and upskilling.

Accountability and Transparency

AI systems, particularly those employing deep learning techniques, can be highly opaque, making it difficult to understand how they reach their decisions. This lack of transparency raises concerns about accountability, especially in critical domains such as healthcare and criminal justice, where erroneous or biased decisions can have profound consequences. Countries like Japan and the United Kingdom are exploring AI governance frameworks that emphasize accountability, transparency, and explainability, so that AI systems can be scrutinized and the organizations deploying them held accountable for their outcomes.

Autonomous Weapons

The development and deployment of AI-powered autonomous weapons systems raise grave ethical concerns about the escalation of armed conflicts and the erosion of human control over warfare. Countries diverge significantly in their approach to regulating these systems: some advocate an outright ban on lethal autonomous weapons systems (LAWS), while others favor limited regulation or actively pursue military applications of AI.

Autonomy and Human Rights

AI systems with decision-making capabilities raise profound ethical questions about human autonomy and agency. In contexts such as healthcare, finance, and criminal justice, AI-driven decisions can significantly affect individuals' lives, prompting concerns about the erosion of human rights and dignity. Countries like Sweden and South Africa are exploring AI governance frameworks that prioritize human-centric values, emphasizing human oversight and control over AI systems to uphold fundamental rights.

Environmental Impact

The computational demands of training and running AI models contribute to significant energy consumption and carbon emissions, exacerbating environmental concerns such as climate change. The proliferation of data centers and high-performance computing infrastructure required for AI development places further strain on global energy resources. Countries like Norway and Costa Rica are investing in renewable energy sources and sustainable computing practices to reduce the environmental footprint of AI technologies and promote responsible AI development.

Cybersecurity

As AI systems become increasingly interconnected and integrated into critical infrastructure, they become potential targets for cyberattacks and data breaches. Vulnerabilities in AI algorithms or systems can be exploited to manipulate outcomes, steal sensitive information, or disrupt essential services. Countries like the United States and Israel are investing in cybersecurity research and regulations to mitigate the risks posed by malicious actors and ensure the integrity and security of AI systems and data.

Misinformation and Fake News

The proliferation of AI-driven content generation tools and algorithms has facilitated the spread of misinformation and disinformation online. From deepfake videos to algorithmically generated fake news articles, AI technologies can be leveraged to manipulate public opinion, sow discord, and undermine democratic processes. Countries are exploring regulatory approaches to combat misinformation and promote media literacy, emphasizing the importance of transparency and accountability in online content dissemination to safeguard public discourse and democratic norms.

Creativity and Human Autonomy

As AI systems become increasingly proficient at tasks traditionally associated with human creativity, such as art generation, music composition, and content creation, there is concern about the potential loss of human agency and creative expression. While AI can augment human creativity and productivity, there is a risk that reliance on AI-generated content may stifle innovation and diminish the diversity of creative voices. Countries are exploring policies to support human-centered creativity and artistic expression, emphasizing the importance of preserving human autonomy and fostering a thriving creative ecosystem alongside AI technologies.